GAN vs Stable Diffusion Alternatives | DALL-E-2 AI Tool for Image Generation

AI image generation now spans a diverse set of technologies. This article compares Generative Adversarial Networks (GANs) with Stable Diffusion, surveys the main Stable Diffusion alternatives, and introduces the DALL-E-2 AI tool offered at AiToolsKit.ai. Along the way it looks at how AI tools for image creation are useful in practice and which technologies sit behind an AI art image generator.


I. Comparison of Technology: GAN vs Stable Diffusion

II. What are the Stable Diffusion Alternatives?

III. About DALL-E-2 AI Tool of AiToolsKit.ai

IV. How Is an AI Tool for Image Generation Useful?

V. Technologies Used in AI Art Image Generator


Comparison of Technology: GAN vs Stable Diffusion

This section compares GANs and Stable Diffusion. Generative Adversarial Networks (GANs) and Stable Diffusion are two prominent approaches to image generation. GANs set up a competition between a generator and a discriminator and produce high-quality images through adversarial training. Stable Diffusion is a diffusion model: it learns to reverse a gradual noising process, so it generates an image by starting from random noise and removing that noise step by step. The key distinction lies in methodology: GANs rely on adversarial learning, while Stable Diffusion relies on iterative denoising.

GANs: Pioneering Adversarial Learning

Generative Adversarial Networks, or GANs, have become synonymous with cutting-edge image generation. By employing a generator to create images and a discriminator to evaluate their authenticity, GANs iteratively refine their models. This adversarial learning process results in remarkably realistic images, making GANs a staple in various AI applications.
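The adversarial objective can be made concrete with a small sketch. It assumes the discriminator outputs a probability that an image is real, and it uses the common non-saturating form of the generator loss; the scores in the example are made-up values, not the output of a trained model.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: discriminator probabilities on real images (should approach 1)
    d_fake: discriminator probabilities on generated images (should approach 0)
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: low when fakes are rated as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator scores for one batch
d_real = [0.9, 0.8, 0.95]
d_fake = [0.1, 0.3, 0.2]
print("discriminator loss:", discriminator_loss(d_real, d_fake))
print("generator loss:", generator_loss(d_fake))
```

Training alternates between lowering the discriminator loss and lowering the generator loss. The two objectives pull in opposite directions on the same scores, which is what makes the setup adversarial.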

Stable Diffusion: Controlled Information Propagation

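Stable Diffusion diverges from GANs by building on a diffusion process. During training, noise is added to images in small, controlled steps, and the model learns to undo each step. To generate an image, the model starts from random noise and refines it iteratively until a clean image emerges. Stable Diffusion runs this process in a compressed latent space rather than on raw pixels, which reduces the computation required. The step-by-step procedure offers stability and control, making Stable Diffusion an intriguing alternative for those seeking a different paradigm in image generation.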
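The noising half of a diffusion process can be sketched in a few lines. This is a minimal illustration, assuming a linear noise schedule and a four-value toy "image"; the schedule endpoints and step count are illustrative choices, and the learned denoising network that reverses the process is not shown.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_t rising linearly across the steps (assumed values)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for all s up to t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    signal = math.sqrt(alpha_bars[t])
    noise = math.sqrt(1.0 - alpha_bars[t])
    return [signal * v + noise * rng.gauss(0.0, 1.0) for v in x0]

alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
x0 = [0.5, -0.25, 1.0, 0.0]  # toy stand-in for an image
rng = random.Random(0)
print(add_noise(x0, 10, alpha_bars, rng))   # early step: mostly signal
print(add_noise(x0, 999, alpha_bars, rng))  # final step: almost pure noise
```

A diffusion model is trained to predict the noise that was added at each step, so that at generation time it can walk from pure noise back toward an image.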

Comparative Analysis: Strengths and Weaknesses

GANs generate an image in a single forward pass and can produce sharp, high-resolution results, but training can be unstable and prone to mode collapse, where the generator covers only part of the variety in the training data. Diffusion models such as Stable Diffusion train more stably and tend to produce more varied outputs, but sampling is slower because each image requires many denoising steps. Weighing these strengths and weaknesses helps in selecting the most suitable technology for a specific use case.

Use Cases: GANs vs Stable Diffusion

GANs are prevalent in tasks like image-to-image translation and style transfer, with pix2pix and CycleGAN as well-known examples. Stable Diffusion finds its niche in scenarios requiring controlled generation, most visibly text-to-image generation and prompt-guided editing such as inpainting; diffusion models have also been explored for medical image synthesis. Understanding the applications of each technology is crucial in making informed decisions for diverse AI projects.

What are the Stable Diffusion Alternatives?

Stable Diffusion, though innovative, is not the sole contender in the realm of image generation. Several stable diffusion alternatives offer distinct approaches, catering to different preferences and requirements. Exploring these alternatives provides a comprehensive perspective on the evolving landscape of AI image generation.

Variational Autoencoders (VAE): Probabilistic Generative Models

VAEs introduce a probabilistic framework for generative modeling. An encoder maps each input image to a distribution over a latent space, and a decoder reconstructs images from points sampled in that space; sampling new latent points yields new outputs. VAE samples tend to be blurrier than GAN images, but VAEs train stably and generate diverse and novel content.
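Two pieces of the VAE recipe are easy to show in isolation: sampling a latent point with the reparameterization trick, and the KL term that keeps the latent distribution close to a standard normal. The sketch below assumes a diagonal Gaussian latent distribution and uses made-up encoder outputs; the encoder and decoder networks themselves are omitted.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv
                     for m, lv in zip(mu, log_var))

# Hypothetical encoder output for one image: a 3-dimensional latent
mu = [0.2, -1.0, 0.5]
log_var = [0.0, -0.5, 0.1]
rng = random.Random(42)
z = reparameterize(mu, log_var, rng)
print("latent sample:", z)
print("KL penalty:", kl_to_standard_normal(mu, log_var))
```

The full training loss adds a reconstruction term to this KL penalty. Writing the sample as `mu + sigma * eps` keeps the randomness in `eps`, so gradients can flow through `mu` and `log_var`.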

Neural Style Transfer: Artistic Image Synthesis

Neural Style Transfer focuses on merging content from one image with the artistic style of another. This technique allows for the creation of visually stunning and stylized images. While not a direct alternative to Stable Diffusion, it caters to artistic applications requiring style infusion.

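Autoregressive Models: Sequential Generation

Autoregressive models generate images sequentially, one pixel at a time, with each pixel conditioned on the pixels generated before it. Notable examples include PixelRNN and PixelCNN. Generation is computationally intensive because the pixels cannot be produced in parallel, but these models offer fine-grained control over the generation process and assign an explicit probability to every image, making them suitable for specific use cases.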
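Pixel-by-pixel generation can be illustrated with a toy example. In the sketch below, `pixel_probability` is a hand-written stand-in for the learned network in a model like PixelCNN, and the "image" is a short row of binary pixels; both are assumptions made for illustration.

```python
import math
import random

def pixel_probability(previous_pixels):
    """Toy stand-in for a learned conditional p(x_i = 1 | earlier pixels).

    Assumption for illustration: a pixel tends to copy its left neighbour.
    """
    if not previous_pixels:
        return 0.5
    return 0.9 if previous_pixels[-1] == 1 else 0.1

def sample_image(num_pixels, rng):
    """Generate pixels one at a time, each conditioned on those before it."""
    pixels = []
    for _ in range(num_pixels):
        p_on = pixel_probability(pixels)
        pixels.append(1 if rng.random() < p_on else 0)
    return pixels

def log_likelihood(pixels):
    """Chain rule: log p(x) is the sum of log p(x_i | earlier pixels)."""
    total = 0.0
    for i, value in enumerate(pixels):
        p_on = pixel_probability(pixels[:i])
        total += math.log(p_on if value == 1 else 1.0 - p_on)
    return total

rng = random.Random(7)
row = sample_image(16, rng)
print(row, log_likelihood(row))
```

The loop in `sample_image` is the reason sampling is slow: each pixel has to wait for all earlier ones. The same conditionals give an exact likelihood through the chain rule in `log_likelihood`.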

Flow-Based Models: Preserving Data Distribution

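Flow-based models, such as RealNVP and Glow, learn the data distribution directly through a sequence of invertible transformations. Because every transformation can be inverted, these models can compute the exact likelihood of an image and generate a sample in a single pass, without the many denoising steps diffusion models need. The invertibility requirement constrains the architecture, however, and their image quality has generally trailed that of GANs and diffusion models.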
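Flow-based models rest on invertible transformations and the change-of-variables formula. The sketch below shows one affine coupling step, the building block used in RealNVP-style models, on a two-value input. The function `scale_and_shift` is a hypothetical stand-in for the small neural networks a real model would learn.

```python
import math

def scale_and_shift(x1):
    """Hypothetical stand-in for the learned networks s(x1) and t(x1)."""
    return math.tanh(x1), 0.5 * x1

def coupling_forward(x1, x2):
    """y1 = x1, y2 = x2 * exp(s) + t. Also returns log|det J|, which equals s."""
    s, t = scale_and_shift(x1)
    return x1, x2 * math.exp(s) + t, s

def coupling_inverse(y1, y2):
    """Exact inverse: s and t are recomputed from y1, which equals x1."""
    s, t = scale_and_shift(y1)
    return y1, (y2 - t) * math.exp(-s)

def log_density(x1, x2):
    """Change of variables with a 2D standard normal base distribution."""
    y1, y2, log_det = coupling_forward(x1, x2)
    log_base = -0.5 * (y1 * y1 + y2 * y2) - math.log(2.0 * math.pi)
    return log_base + log_det

y1, y2, _ = coupling_forward(0.3, -1.2)
print(coupling_inverse(y1, y2))   # recovers the input up to rounding
print(log_density(0.3, -1.2))     # exact log-likelihood of the input
```

Because half of the input passes through unchanged, the inverse and the Jacobian determinant are cheap to compute. Real models stack many such steps and alternate which half is transformed.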

Hybrid Approaches: Merging the Best of Both Worlds

Some alternatives adopt hybrid approaches, combining the strengths of different techniques. For instance, models merging GANs with VAEs aim to leverage the benefits of adversarial learning and probabilistic generative modeling. Exploring these hybrid models offers a versatile approach to image generation.

About DALL-E-2 AI Tool offered at AiToolsKit.ai

AiToolsKit.ai offers the DALL-E-2 AI Tool, an AI tool designed for image generation. DALL-E 2, the successor to OpenAI's DALL-E, generates diverse and imaginative images from textual prompts. Whether you're a designer seeking inspiration or a content creator looking to add a unique touch to your projects, DALL-E-2 proves to be a versatile and powerful ally.

Advanced Image Synthesis with Textual Prompts

DALL-E-2 stands out by enabling users to input textual prompts, which the model translates into vivid and creative images. This advanced image synthesis capability empowers users to explore a wide range of concepts, making it an invaluable tool for creative professionals.

Unleashing Creativity Across Industries

From graphic design and advertising to content creation and conceptual art, DALL-E-2 finds applications across various industries. The tool's ability to transform textual ideas into visually striking images offers a novel and efficient way for professionals to express their creativity.

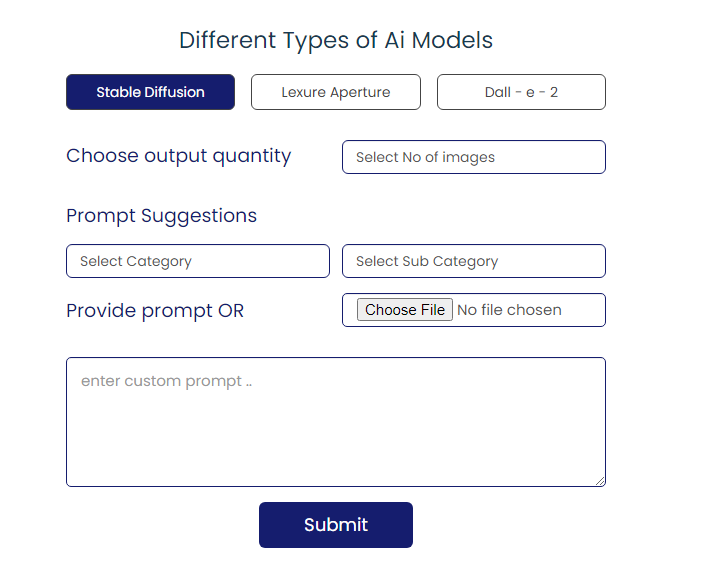

Intuitive User Interface for Seamless Interaction

AiToolsKit.ai prioritizes user experience, and DALL-E-2 reflects this commitment with its intuitive user interface. Navigating the tool is user-friendly, ensuring that both seasoned professionals and newcomers can harness the power of AI for image generation effortlessly.

Customization and Control: Tailoring Output to Your Needs

DALL-E-2 doesn't just stop at generating images; it allows users to customize and control various aspects of the output. Adjusting parameters, styles, and details provides a level of creative control that sets DALL-E-2 apart as a tool that adapts to the unique requirements of each user.

Integration Capabilities: Seamlessly Fit into Workflows

AiToolsKit.ai understands the importance of seamless integration into existing workflows. DALL-E-2 is designed with compatibility in mind, ensuring that it can be effortlessly integrated into diverse creative processes, making it an asset for professionals across different domains.

How Is an AI Tool for Image Generation Useful?

The emergence of AI tools for image generation has changed the creative landscape. These tools use generative models to streamline the creative process, turning a short description into candidate images in seconds. The sections below outline where that capability pays off across industries and creative work.

Efficiency in Content Creation

AI tools for image generation, such as DALL-E-2, significantly enhance efficiency in content creation. By automating the generation process based on textual prompts, these tools empower creators to explore multiple ideas rapidly, accelerating the content production pipeline.

Innovative Concept Exploration

The ability to generate images based on textual prompts opens up new avenues for innovative concept exploration. Creatives can experiment with diverse ideas and visualize concepts before committing to the time-consuming process of manual creation, fostering a more dynamic and experimental creative environment.

Time and Resource Savings

Traditional image creation can be resource-intensive and time-consuming. AI tools alleviate these challenges by providing quick and automated solutions. This not only saves time for creators but also optimizes resource allocation, allowing professionals to focus on higher-order creative tasks.

Enhanced Collaboration and Communication

AI tools facilitate enhanced collaboration and communication within creative teams. The visual representation of ideas through generated images bridges communication gaps, ensuring a clearer understanding of concepts among team members, clients, and stakeholders.

Adaptability Across Industries

The utility of AI tools for image generation extends across diverse industries. Whether in graphic design, marketing, entertainment, or research, these tools prove adaptable to different needs and requirements. The versatility of AI-generated images makes them a valuable asset in various professional domains.

Technologies Used in AI Art Image Generator

Behind every AI art image generator lie several layers of technology that drive the creative process. Understanding them provides insight into what these generators can and cannot do, and into the mechanisms that produce the finished artwork.

Deep Learning Frameworks: The Backbone of AI Art Generators

AI art image generators rely on deep learning frameworks such as TensorFlow, PyTorch, and Keras. These frameworks provide the foundation for building and training complex neural network models, enabling the generators to learn and replicate artistic styles.

Convolutional Neural Networks (CNNs): Capturing Spatial Hierarchies

CNNs play a pivotal role in AI art image generators by capturing spatial hierarchies in images. These networks excel in recognizing patterns, textures, and features, allowing the generator to understand and recreate the intricate details that contribute to the overall artistic composition.
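The operation at the heart of a CNN layer is a small filter sliding across the image. The following sketch implements it in plain Python for a single channel with no padding; the edge-detecting kernel is hand-written for illustration, whereas a trained network learns its kernel values.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers apply."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# A 4x4 image with a vertical edge between columns 1 and 2
image = [[0, 0, 1, 1] for _ in range(4)]
# Hand-written horizontal-difference kernel (a learned filter would replace it)
kernel = [[-1, 1]]
print(conv2d(image, kernel))
# prints [[0, 1, 0], [0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

The output is nonzero only where the edge sits. Stacking many such filters, layer after layer, is how a CNN builds up from edges to textures to larger structures.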

Transfer Learning: Leveraging Pre-trained Models

Transfer learning is a key technique in AI art image generators, enabling models to leverage knowledge gained from pre-trained models on large datasets. This approach accelerates the training process and enhances the generator's ability to generalize artistic styles across different inputs.

Generative Models: Crafting Realistic and Diverse Artworks

Generative models, including GANs, VAEs, and Stable Diffusion, form the core of AI art image generators. These models possess the capability to generate realistic and diverse artworks by learning the underlying distribution of artistic styles and content during the training phase.

Style Transfer Algorithms: Infusing Artistic Styles

Style transfer algorithms contribute to the artistic flair of generated images. By separating content and style representations, these algorithms allow the generator to infuse different artistic styles into the generated artworks, creating visually stunning and stylistically diverse outputs.
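One common way to separate style from content is to summarize style with a Gram matrix of CNN feature maps, which records which features occur together but not where they occur. The sketch below assumes the feature maps have already been extracted and flattened; the small numeric lists are placeholders, not real network activations.

```python
def gram_matrix(features):
    """features: list of C channels, each a flat list of N activations.

    Entry (i, j) is the average product of channels i and j over positions.
    """
    num_positions = len(features[0])
    return [[sum(a * b for a, b in zip(ci, cj)) / num_positions
             for cj in features]
            for ci in features]

def style_loss(generated_features, style_features):
    """Mean squared difference between the two Gram matrices."""
    g = gram_matrix(generated_features)
    s = gram_matrix(style_features)
    c = len(g)
    return sum((g[i][j] - s[i][j]) ** 2
               for i in range(c) for j in range(c)) / (c * c)

style = [[1.0, 2.0], [3.0, 4.0]]      # placeholder: 2 channels, 2 positions
shuffled = [[2.0, 1.0], [4.0, 3.0]]   # same activations, positions swapped
print(gram_matrix(style))
print(style_loss(shuffled, style))    # 0.0: position does not affect style
```

Minimizing this loss pushes a generated image toward the style image's textures and colors, while a separate content loss on the raw feature maps preserves the layout of the content image.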

In conclusion, the world of AI-driven image generation is a fascinating intersection of technology and creativity. From the comparative analysis of GANs and Stable Diffusion to exploring alternatives, delving into the capabilities of DALL-E-2, understanding the utility of AI tools, and uncovering the technologies behind AI art image generators, each facet contributes to the rich tapestry of possibilities in the realm of artificial intelligence and creative expression.
